
Computer vision has many applications across a wide variety of use cases. At cnvrg.io, we strive to make building your ML automation pipeline easier and more intuitive. With your own custom Automated Machine Learning (AutoML) solution, you can automate the process of applying machine learning to real-world problems and tailor it to your own applications and data. Building a custom AutoML pipeline lets you control the process end to end, from planning to production, in a straightforward, hassle-free way.

Demand for image classification systems keeps growing in fashion, e-commerce, nature, agriculture, manufacturing, security and more. Thanks to deep learning methods and advanced research in the field, building computer vision models is now far more accessible than it used to be.

There are different approaches to tackling computer vision AutoML. We'll cover two prominent and commonly used methods.

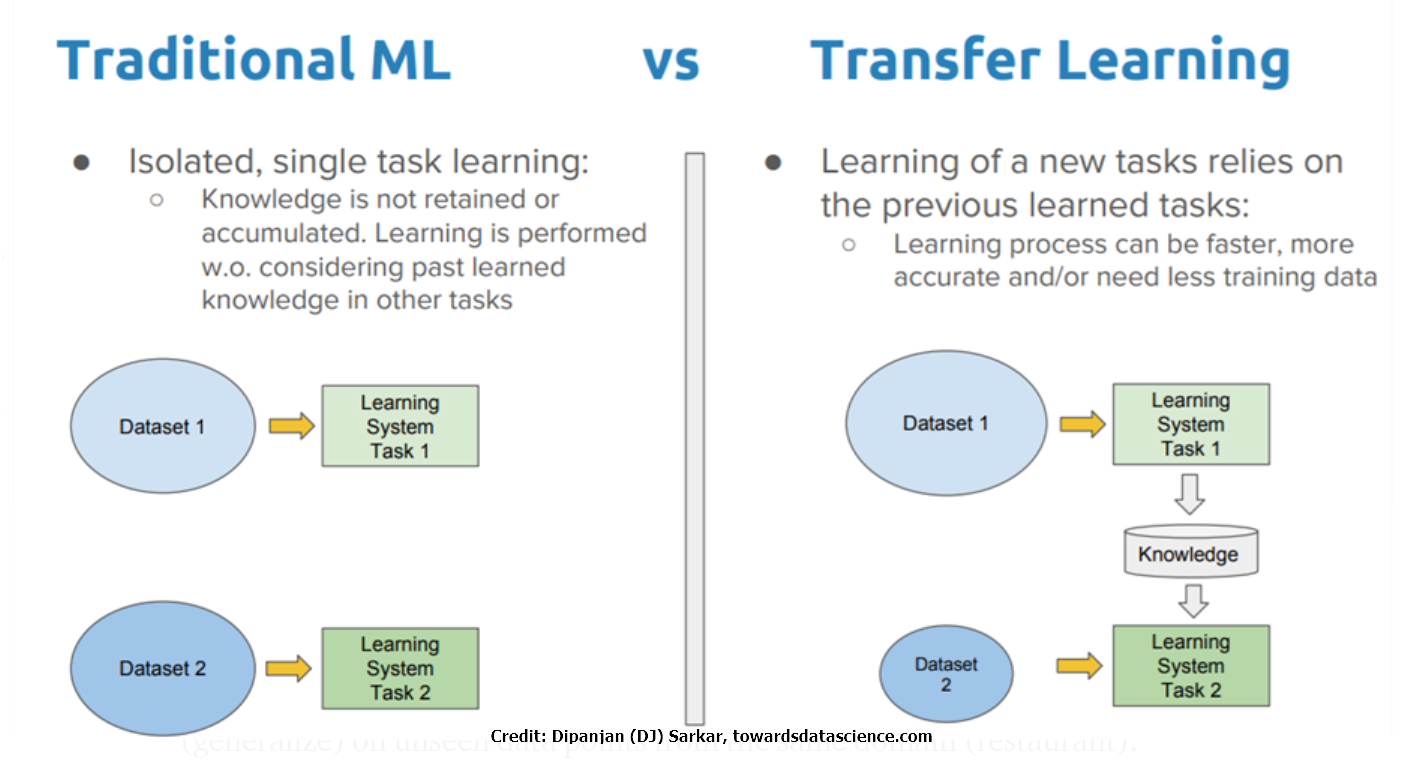

Transfer Learning is a deep learning method that lets developers take an existing neural network, already trained successfully for one task, and apply it to a different domain.

Neural Architecture Search (NAS) is a deep learning method that completely automates the design of the neural network architecture itself.

Transfer Learning

As mentioned earlier, this method lets you take an existing, pre-trained neural network and apply it to a different dataset. It is particularly useful when you don't have a lot of data and when the pre-trained model has learned general features (such as edges, textures and shapes) that carry over to the new task. Since many pre-built models are publicly available, getting started is easy. The method is considered simple to use, and the core setup can take as few as two lines of code.
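The "cut the last layers and retrain" idea can be sketched in Keras as follows. This is a minimal sketch under stated assumptions, not cnvrg.io's implementation: it assumes TensorFlow 2.x with `tf.keras`, picks ResNet50 as the base model, and `build_transfer_model` is a hypothetical helper name. The pre-trained base is frozen and only a new classification head is trained; the two "core" lines are loading the base and freezing it.

```python
import tensorflow as tf


def build_transfer_model(num_classes, input_shape=(224, 224, 3), weights="imagenet"):
    """Hypothetical helper: frozen pre-trained ResNet50 base plus a new classifier head.

    weights="imagenet" downloads the pre-trained weights on first use;
    weights=None builds the same graph with random weights (useful only for
    offline smoke tests, since no knowledge is transferred).
    """
    # Core lines: load the base without its 1000-class ImageNet head,
    # then freeze it so its learned features are reused as-is.
    base = tf.keras.applications.ResNet50(
        include_top=False, weights=weights, input_shape=input_shape
    )
    base.trainable = False

    inputs = tf.keras.Input(shape=input_shape)
    # ResNet50 expects its own preprocessing of raw 0-255 pixel values.
    x = tf.keras.applications.resnet50.preprocess_input(inputs)
    x = base(x, training=False)  # keep BatchNorm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
        loss="sparse_categorical_crossentropy",  # integer class labels
        metrics=["accuracy"],
    )
    return model
```

Calling `model.fit(train_ds, validation_data=val_ds, epochs=5)` would then train only the new head; `train_ds` and `val_ds` are placeholders for your own labeled image datasets.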

Neural Architecture Search (NAS)

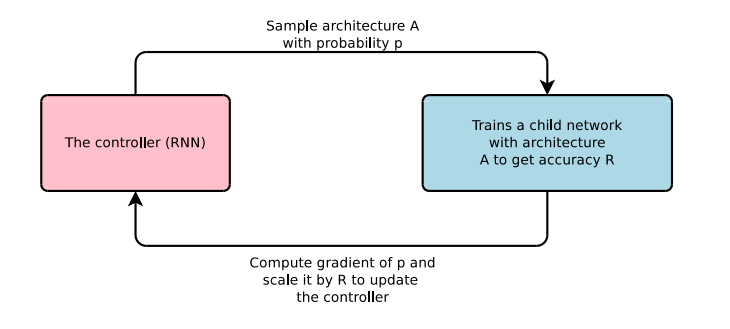

NAS is an algorithm that searches for the neural network architecture best suited to your dataset. It uses an RNN (the controller), which is trained in a loop: it samples candidate architectures, the candidates are trained, and their resulting performance serves as feedback for building better-suited architectures.

The optimization can be carried out in several ways, including reinforcement learning, evolutionary search and Bayesian optimization.
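Of the search strategies listed above, evolutionary search is the easiest to sketch without a deep learning framework. The code below is a minimal sketch of one evolutionary variant, regularized ("aging") evolution, over a small hypothetical search space. The `evaluate` callback stands in for the expensive step of training a candidate network and returning its validation accuracy; `toy_score` is a made-up scoring function included only so the code runs.

```python
import random

# Hypothetical search space: each architecture makes one choice per decision.
SEARCH_SPACE = {
    "num_layers": [2, 3, 4, 5],
    "filters": [16, 32, 64, 128],
    "kernel_size": [3, 5, 7],
}


def random_architecture(rng):
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}


def mutate(parent, rng):
    """Copy the parent and resample one randomly chosen decision."""
    child = dict(parent)
    name = rng.choice(list(SEARCH_SPACE))
    child[name] = rng.choice(SEARCH_SPACE[name])
    return child


def evolutionary_search(evaluate, population_size=10, cycles=100, sample_size=3, seed=0):
    """Regularized (aging) evolution; returns the best (architecture, score) seen."""
    rng = random.Random(seed)
    population = []
    for _ in range(population_size):
        arch = random_architecture(rng)
        population.append((arch, evaluate(arch)))
    history = list(population)

    for _ in range(cycles):
        # Tournament selection: the best of a random sample becomes the parent.
        contenders = rng.sample(population, sample_size)
        parent = max(contenders, key=lambda item: item[1])[0]
        child = mutate(parent, rng)
        scored = (child, evaluate(child))
        population.append(scored)
        population.pop(0)  # age out the oldest member, not the worst
        history.append(scored)

    return max(history, key=lambda item: item[1])


def toy_score(arch):
    """Made-up stand-in for 'train the child network and report its accuracy'."""
    return -(
        abs(arch["num_layers"] - 4)
        + abs(arch["filters"] - 64) / 32
        + abs(arch["kernel_size"] - 3)
    )
```

For example, `best_arch, best_score = evolutionary_search(toy_score)`. Removing the oldest member rather than the worst keeps the population turning over, so a single lucky evaluation cannot dominate the search indefinitely.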

In the original reinforcement learning formulation, the controller (RNN) samples an architecture with probability p, trains a child network with that architecture to reach some accuracy R, then computes the gradient of (the log of) p with respect to the controller's parameters and scales it by R to update the controller, as illustrated above.
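This update is the REINFORCE policy gradient. Writing $p = \prod_{t} P(a_t \mid a_{1:t-1}; \theta)$ for the probability of the sampled architecture decisions $a_1, \dots, a_T$ under controller parameters $\theta$, and $b$ for a baseline (a moving average of past rewards) that reduces variance, the controller ascends

$$\nabla_\theta J(\theta) \approx \sum_{t=1}^{T} \nabla_\theta \log P(a_t \mid a_{1:t-1}; \theta)\,(R - b).$$

The Python sketch below is a deliberate simplification, not the original controller: independent softmax distributions replace the RNN (so each decision ignores the earlier ones), and `toy_reward` is a made-up stand-in for training a child network and measuring its accuracy R. All names are hypothetical.

```python
import math
import random

# Hypothetical search space: one decision per key, one softmax per decision.
CHOICES = {
    "filters": [16, 32, 64, 128],
    "kernel_size": [3, 5, 7],
}


def softmax(logits):
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


class Controller:
    """Simplified REINFORCE controller: independent softmax logits per decision
    stand in for the RNN, so each decision ignores the previous ones."""

    def __init__(self, lr=0.1, baseline_decay=0.9, seed=0):
        self.logits = {name: [0.0] * len(opts) for name, opts in CHOICES.items()}
        self.lr = lr
        self.baseline_decay = baseline_decay
        self.baseline = None
        self.rng = random.Random(seed)

    def sample(self):
        """Sample one option per decision; p is the product of the chosen probabilities."""
        arch, indices = {}, {}
        for name, opts in CHOICES.items():
            probs = softmax(self.logits[name])
            idx = self.rng.choices(range(len(opts)), weights=probs)[0]
            arch[name], indices[name] = opts[idx], idx
        return arch, indices

    def update(self, indices, reward):
        """Gradient ascent on log p, scaled by the advantage R - b."""
        if self.baseline is None:
            self.baseline = reward
        advantage = reward - self.baseline
        for name, idx in indices.items():
            probs = softmax(self.logits[name])
            for j, p_j in enumerate(probs):
                # d log softmax(logits)[idx] / d logits[j] = 1[j == idx] - p_j
                grad = (1.0 if j == idx else 0.0) - p_j
                self.logits[name][j] += self.lr * advantage * grad
        # Exponential moving average of past rewards serves as the baseline b.
        self.baseline = (
            self.baseline_decay * self.baseline + (1 - self.baseline_decay) * reward
        )


def toy_reward(arch):
    """Made-up stand-in for the child network's validation accuracy R."""
    return float(arch["filters"] == 64) + float(arch["kernel_size"] == 3)


def run_search(steps=1000, lr=0.2, seed=0):
    controller = Controller(lr=lr, seed=seed)
    for _ in range(steps):
        arch, indices = controller.sample()
        controller.update(indices, toy_reward(arch))
    return controller
```

After `run_search()`, `softmax(controller.logits["filters"])` should put most of its probability mass on 64, the option the toy reward favors.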

Although NAS was originally considered computationally intensive (in one case at Google, the architecture search required 450 GPUs for 4 days), newer techniques such as parameter sharing (ENAS) and differentiable search (DARTS) have made NAS far cheaper and a more feasible option.

Building Your AutoML Computer Vision Pipeline

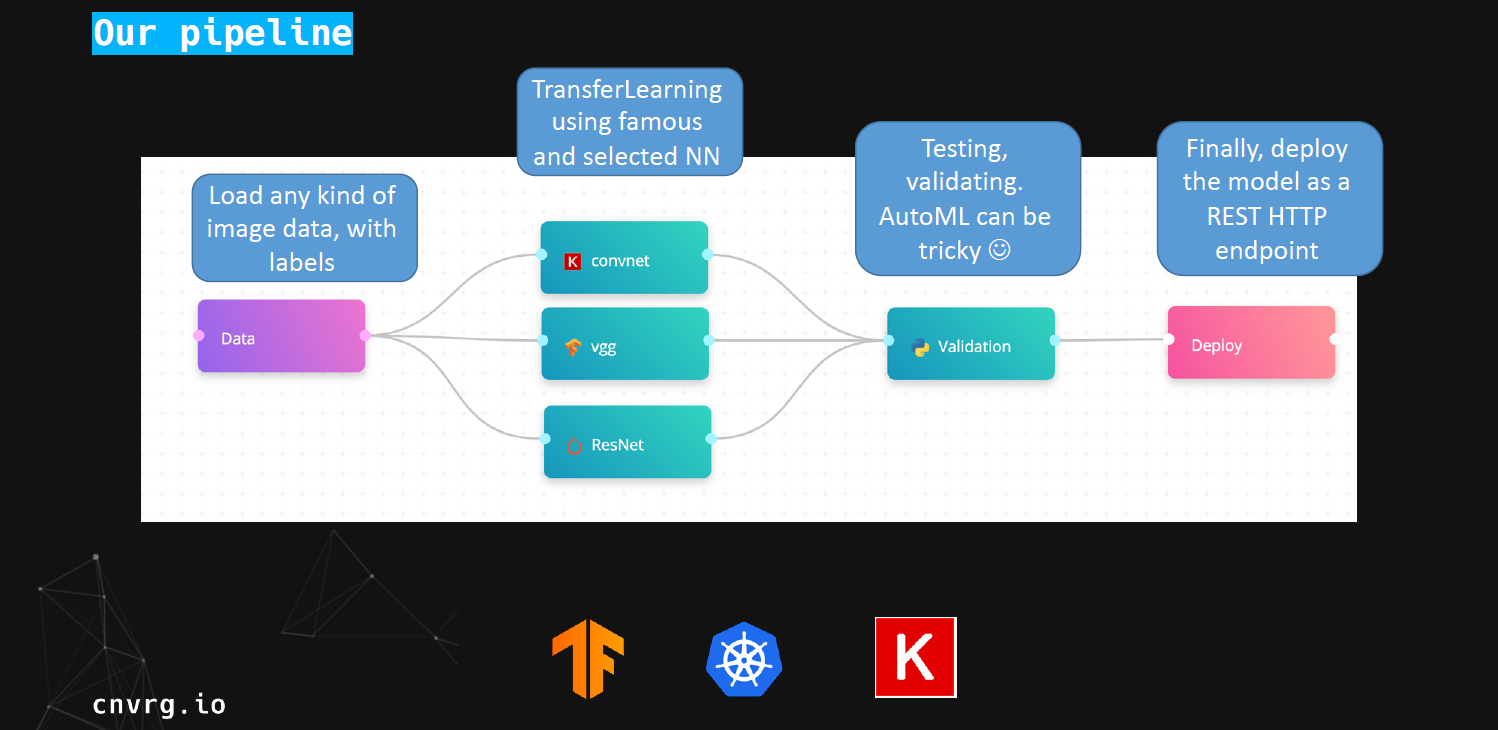

Let's build a hypothetical pipeline using cnvrg.io. We'll use Keras on TensorFlow, apply transfer learning to keep things simple, and run the backend pipeline on Kubernetes, with cnvrg.io tying it all together and simplifying the process with model management. First, choose and load an appropriate pre-trained base model and set up a data generator for your images. Then cut the base model's last layers, retrain new ones on your data, and later fine-tune the model. At the end of the process, we deploy the model to production.
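The data-generator and fine-tuning steps might look like the sketch below. It is an illustration under stated assumptions, not cnvrg.io's code: it assumes a recent TensorFlow 2.x release, a folder-per-class image directory, and a Keras `model` whose pre-trained convolutional `base` was frozen while a new head was trained first. `load_datasets` and `unfreeze_top_layers` are hypothetical helper names, and `num_layers=20` and `learning_rate=1e-5` are illustrative defaults rather than recommended values.

```python
import tensorflow as tf


def load_datasets(data_dir, image_size=(224, 224), batch_size=32, seed=123):
    """Assumed layout: data_dir/<class_name>/<image files>, one folder per label.
    Returns (train_ds, val_ds) with an 80/20 split and integer labels."""
    common = dict(
        validation_split=0.2, seed=seed, image_size=image_size, batch_size=batch_size
    )
    train_ds = tf.keras.utils.image_dataset_from_directory(
        data_dir, subset="training", **common
    )
    val_ds = tf.keras.utils.image_dataset_from_directory(
        data_dir, subset="validation", **common
    )
    return train_ds, val_ds


def unfreeze_top_layers(model, base, num_layers=20, learning_rate=1e-5):
    """Fine-tuning phase: make only the last `num_layers` layers of `base`
    trainable, keep BatchNormalization layers frozen, and recompile `model`
    with a small learning rate so the pre-trained weights change only slightly."""
    base.trainable = True
    cutoff = max(len(base.layers) - num_layers, 0)
    for i, layer in enumerate(base.layers):
        frozen = i < cutoff or isinstance(layer, tf.keras.layers.BatchNormalization)
        layer.trainable = not frozen
    # Recompile so the new trainable settings take effect during fit().
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

A second training round would then be `unfreeze_top_layers(model, base)` followed by `model.fit(train_ds, validation_data=val_ds, epochs=5)`. The small learning rate is the usual precaution against large gradient updates wiping out the pre-trained features.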

Our pipeline should meet the following criteria:

- High performance (speed, accuracy, system resources, etc.)

- Reproducibility

- Efficiency

- An end-to-end process, from training to production
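For the reproducibility criterion, one simple habit is to store, for every run, the exact configuration, random seed and a fingerprint of the data, and derive a run ID from them. The sketch below is a hypothetical, standard-library-only illustration (`fingerprint_files` and `experiment_record` are made-up names, not a cnvrg.io API). In a TensorFlow job you would also call `tf.keras.utils.set_random_seed(seed)`, which seeds Python, NumPy and TensorFlow together.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def fingerprint_files(paths):
    """SHA-256 over each file's name and content hash, in sorted path order."""
    digest = hashlib.sha256()
    for path in sorted(Path(p) for p in paths):
        # Fixed-length content hash after a NUL separator avoids ambiguous concatenation.
        digest.update(path.name.encode("utf-8") + b"\0")
        digest.update(hashlib.sha256(path.read_bytes()).digest())
    return digest.hexdigest()


def experiment_record(config, data_paths, seed):
    """Capture what is needed to rerun an experiment and derive a stable run ID."""
    record = {
        "config": config,
        "seed": seed,
        "data_sha256": fingerprint_files(data_paths),
    }
    # Canonical JSON (sorted keys, fixed separators) so equal inputs give equal IDs.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    record["run_id"] = hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]
    return record


if __name__ == "__main__":
    # Usage example with a throwaway two-file "dataset" (placeholder bytes, not images).
    with tempfile.TemporaryDirectory() as tmp:
        files = [Path(tmp) / "cat_001.jpg", Path(tmp) / "dog_001.jpg"]
        for f in files:
            f.write_bytes(f.name.encode("utf-8"))
        config = {"base_model": "ResNet50", "learning_rate": 1e-3, "epochs": 5}
        print(experiment_record(config, files, seed=42)["run_id"])
```

If any input changes (a hyperparameter, the seed or a single image), the run ID changes too, which makes it easy to tell whether two results are actually comparable.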

We'll start by loading labeled image data as our initial dataset. We'll then apply transfer learning using well-known existing convolutional networks (ConvNets) such as VGG and ResNet. Our system will run and test the experiments, validate the model and finally deploy it as a REST HTTP endpoint, as shown in the diagram above. We suggest using Kubernetes as your backend because of its flexibility.
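Once the model is deployed, clients call the REST endpoint over HTTP. The client sketch below assumes the model is served with TensorFlow Serving, whose REST API accepts `POST /v1/models/<model_name>:predict` (port 8501 by default) with a JSON body of the form `{"instances": [...]}` and returns `{"predictions": [...]}`. This is an assumption for illustration; a cnvrg.io endpoint may use a different URL and payload format. The host, model name and helper names are placeholders.

```python
import json
import urllib.request


def build_predict_request(host, model_name, instances, port=8501):
    """Build a POST request for TensorFlow Serving's REST predict API."""
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def predict(host, model_name, instances, timeout=10.0):
    """Send the request and return the "predictions" list from the JSON response."""
    request = build_predict_request(host, model_name, instances)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["predictions"]
```

For an image classifier, each entry in `instances` would be one image as a nested list of pixel values with the shape the model expects, and each prediction would be that image's list of class probabilities.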

Practical And Better - Easier With cnvrg.io

Building your own AutoML solution is not only practical and scalable; because it is tailored to your own data, it can also yield better results than external solutions, and cnvrg.io automates it into an easy workflow. Take the time to create an end-to-end pipeline, rather than focusing only on training or only on deployment, so that AI becomes accessible to your entire organization. Last but not least, track everything meticulously: your data, code, experiments, models and predictions. With cnvrg.io you get one platform to manage (dashboards, tracking and data visualization), build (any tool, no configuration required) and automate (from research to production).

Want to see for yourself how to build your AutoML pipeline? Check out this free webinar recording for a hands-on demonstration.